Every other day a new language model drops, and I give it this chess puzzle to see if it spits out the correct answer, just for lulz 🤣 I have yet to receive a correct answer in one shot.

Last week I tried guiding DeepSeek-R1 to the eventual result: it went from parsing the FEN position wrong, to playing illegal moves, until I eventually just gave it the answer and had to convince it of the reasoning. Most models just love repeating the same move: pawn to queen.

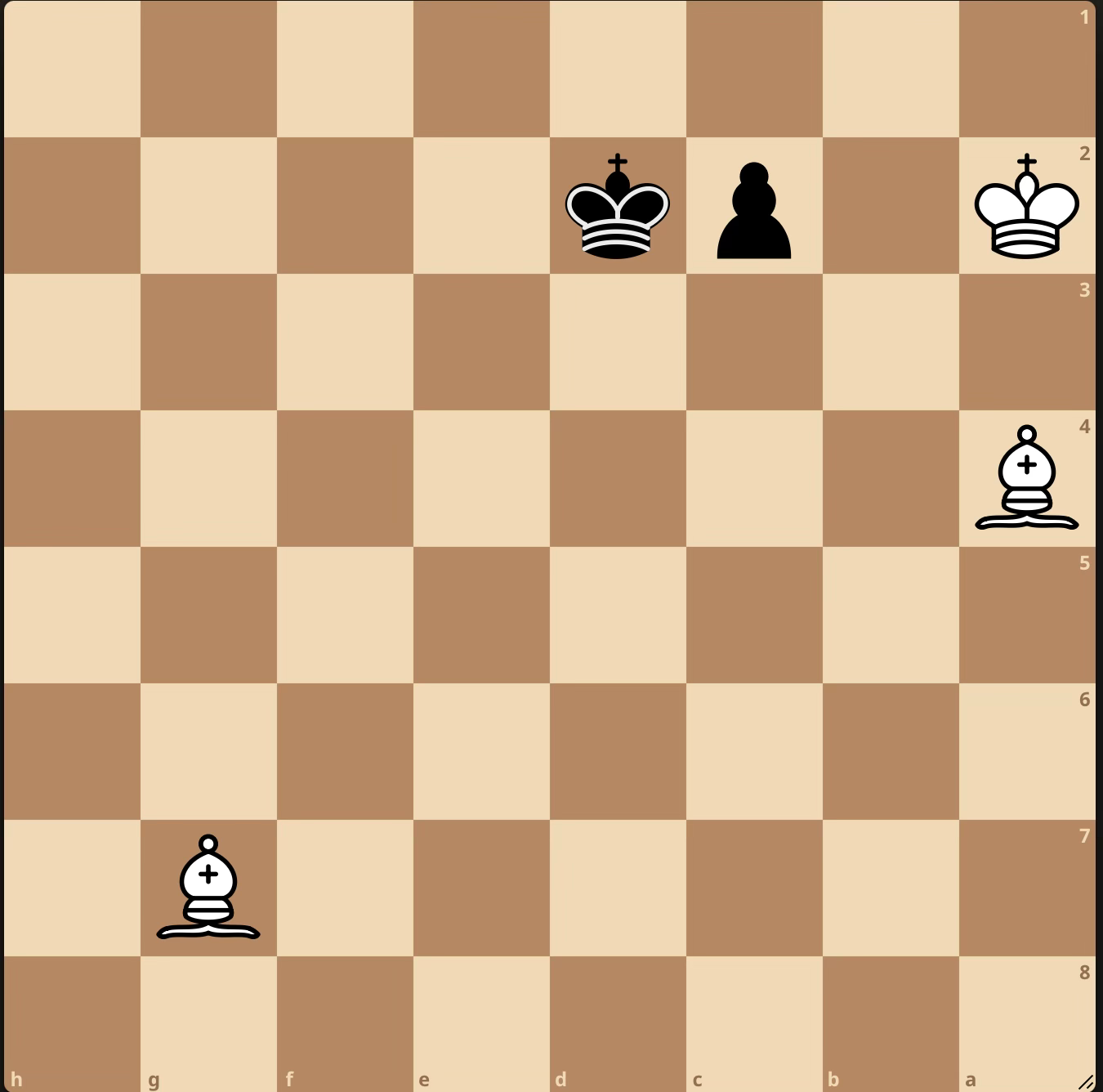

The text prompt for the puzzle, in FEN, to feed to LLMs: What should black play? 8/6B1/8/8/B7/8/K1pk4/8 b - - 0 1
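Since wrong FEN parsing was one of the failure modes, here is a minimal sketch of how the string decodes. It only reads the piece placement and the side to move, and the function name `parse_fen` is my own, not taken from any chess library.

```python
def parse_fen(fen):
    """Return (pieces, side), where pieces maps squares like 'c2' to FEN letters."""
    placement, side, *_ = fen.split()
    ranks = placement.split("/")
    if len(ranks) != 8:
        raise ValueError("FEN must describe 8 ranks")
    pieces = {}
    for rank_index, row in enumerate(ranks):
        rank = 8 - rank_index  # FEN lists rank 8 first, rank 1 last
        file_index = 0
        for ch in row:
            if ch.isdigit():
                file_index += int(ch)  # a digit means that many empty squares
            else:
                # uppercase letters are white pieces, lowercase are black
                pieces["abcdefgh"[file_index] + str(rank)] = ch
                file_index += 1
        if file_index != 8:
            raise ValueError(f"rank {rank} does not have 8 files")
    return pieces, side


PUZZLE_FEN = "8/6B1/8/8/B7/8/K1pk4/8 b - - 0 1"
pieces, side = parse_fen(PUZZLE_FEN)
print(pieces, side)
```

Read this way, the board has white bishops on g7 and a4, the white king on a2, and a black pawn on c2 next to the black king on d2, with black to move.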

There is a lot of literature about LLMs not being able to play chess properly, which is expected. Though this did not stop folks from organizing an LLM chess championship this month, where bots ate their own pieces, brought back dead knights and suffered short-term memory loss about the board positions.

But why this specific puzzle and not an actual benchmark? It is quick to test before running an actual benchmark, and the next move seems intuitively easy for an average chess player. It also has only five pieces: chess endgames with up to seven pieces are solved, and the results, assuming perfect play, can be stored for quick lookup (in what is called a tablebase). The optimal data structure for up to 5 pieces takes less than 1GB of space. It shoots up to ~16TB for 7 pieces. Being able to just look up cached values, as a real engine does, also removes the need for an actual tree search like Stockfish's, which is a lot to ask from an LLM. I did try reasoning with 4o to simulate a minimax with alpha-beta pruning, but it still parroted wrong stuff, forgetting things like forced moves.
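For reference, this is roughly what "minimax with alpha-beta pruning" means, as a minimal sketch on a toy tree rather than on real chess positions. The nested-list tree and its leaf scores are made up for illustration; a real engine would generate moves and evaluate positions instead.

```python
def alphabeta(node, maximizing, alpha=float("-inf"), beta=float("inf"), visited=None):
    """Minimax value of a nested-list game tree, skipping branches that cannot matter."""
    if not isinstance(node, list):  # a leaf holds a static score
        if visited is not None:
            visited.append(node)
        return node
    if maximizing:
        best = float("-inf")
        for child in node:
            best = max(best, alphabeta(child, False, alpha, beta, visited))
            alpha = max(alpha, best)
            if alpha >= beta:  # the minimizing side already has a better option elsewhere
                break
        return best
    best = float("inf")
    for child in node:
        best = min(best, alphabeta(child, True, alpha, beta, visited))
        beta = min(beta, best)
        if alpha >= beta:  # the maximizing side already has a better option elsewhere
            break
    return best


# Hypothetical two-ply tree: we pick a branch, then the opponent picks the leaf.
toy_tree = [[3, 5], [6, 9], [1, 2]]
visited = []
best = alphabeta(toy_tree, True, visited=visited)
print(best, visited)
```

On this toy tree the search never looks at the last leaf, because the third branch is already worse than the second once its first reply is seen. A tablebase skips even this step: the stored result for the position is simply looked up.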

This puzzle also uses an uncommon motif of under-promotion, where you lose by promoting the pawn to the most powerful piece, a queen. Even with the knowledge of under-promotion and tablebase, the LLM would still need to know the 50-move draw rule of practical play to decide on the result.
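The geometry behind the motif can be checked with a few lines, as a rough sketch. It assumes the intended under-promotion is to a knight on c1 and that white answers a queen promotion with the bishop move g7 to h6; both are my reading of the position, and the helper names are hypothetical. The code only tests attack lines, not full move legality.

```python
FILES = "abcdefgh"


def sq(name):
    """Convert a square name like 'c1' to zero-based (file, rank)."""
    return FILES.index(name[0]), int(name[1]) - 1


def knight_attacks(src, dst):
    (f1, r1), (f2, r2) = sq(src), sq(dst)
    return sorted((abs(f1 - f2), abs(r1 - r2))) == [1, 2]


def bishop_ray(src, dst):
    """Squares strictly between src and dst on a shared diagonal, else None."""
    (f1, r1), (f2, r2) = sq(src), sq(dst)
    if src == dst or abs(f1 - f2) != abs(r1 - r2):
        return None
    df = 1 if f2 > f1 else -1
    dr = 1 if r2 > r1 else -1
    return [FILES[f1 + i * df] + str(r1 + i * dr + 1) for i in range(1, abs(f2 - f1))]


def bishop_attacks(src, dst, occupied):
    ray = bishop_ray(src, dst)
    return ray is not None and not any(s in occupied for s in ray)


# Case 1: black plays c1=N. The new knight attacks the white king on a2.
knight_gives_check = knight_attacks("c1", "a2")

# Case 2: black plays c1=Q and white replies with the bishop from g7 to h6.
occupied = {"a2", "a4", "h6", "d2", "c1"}
bishop_gives_check = bishop_attacks("h6", "d2", occupied)
queen_behind_king = "d2" in bishop_ray("h6", "c1")

# c2 and d1 are the only squares next to both d2 and c1, so they are the only
# squares from which the black king could keep guarding the queen.
safe_guard_squares = [s for s in ("c2", "d1") if not bishop_attacks("a4", s, occupied)]
print(knight_gives_check, bishop_gives_check, queen_behind_king, safe_guard_squares)
```

In the queen line the king is checked with the queen standing behind it on the same diagonal, and both squares that would keep the queen guarded are covered by the a4 bishop. Whether the resulting two bishops versus knight ending can be won inside the 50-move limit is a tablebase question that this sketch does not touch.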

While almost all of the strongest chess engines use some form of neural net today (the strongest networks of Leela Chess Zero are now transformer-based and outperform the older convolution-based architectures by almost 300 Elo points, and Stockfish at the very least uses NNUE), it would be amusing to see a general-purpose LLM play chess at master level or above in the future without any chess-specific work. Or solve basic puzzles like this.

A childhood curiosity about computers has led me to explore several avenues of computer science. The use of chess as a modeling testbed for hard problems, and also as the end product to be worked on, has been a joy to watch. It is one of the reasons why I tried learning it so late in life and played my first officially rated tournament last year. (And I got a trophy!)

(Removed from the noise about LLMs snubbing chess, a chess-related paper that caught my attention recently was about evidence of learned look-ahead in the policy network of Lc0, and extending the argument to maybe use it for future mesa-optimization tests - https://arxiv.org/pdf/2406.00877 )